The robotaxi operator introduced the Waymo World Model, a generative “world model” for simulating autonomous driving situations. It is built on Genie 3, which Google DeepMind describes as the company’s most advanced general-purpose world model, and has been adapted to the specific requirements of road traffic.

“The strong world knowledge of Genie 3, acquired through pretraining on an extremely large and diverse set of videos, enables us to explore situations our fleet has never directly observed,” Waymo writes.

Waymo calls simulation one of the three central pillars of its safety approach. While the Waymo Driver has logged nearly 200 million fully autonomous miles in the real world, it “drives” billions of miles in virtual worlds before encountering scenarios on public roads.

Waymo argues that simulating rare scenarios better prepares the Waymo Driver for complex situations. However, the company does not provide specific benchmark results or independent evaluations of the model in its announcement.

Pretrained world knowledge instead of pure driving data

According to Waymo, most simulation models in the industry are trained exclusively on a company’s own driving data—limiting the system to a narrow range of experience. The Waymo World Model takes a different approach: it leverages the broad world knowledge Genie 3 gained from pretraining on an extremely large and diverse video dataset.

Through specialized post-training, this 2D video knowledge is translated into 3D LiDAR outputs tailored to Waymo’s hardware suite. The model generates both camera and LiDAR data: cameras capture visual detail, while LiDAR provides precise depth information as a complementary signal.

This is intended to enable simulations of scenarios Waymo’s fleet has never directly observed—for example, an encounter with an elephant, a tornado, a flooded residential area, or even snow on tropical roads lined with palm trees.

Three control mechanisms for counterfactual scenarios

Waymo says a key feature of the Waymo World Model is fine-grained controllability via three mechanisms:

-

Driving action control enables counterfactual “what if” scenarios—such as whether the Waymo Driver could have driven more assertively in a given situation. Unlike purely reconstructive methods such as 3D Gaussian splats, which can visually collapse when routes deviate, the generative model is designed to maintain realism and consistency.

-

Scene layout control allows adjustments to road geometry, traffic-light states, and the behavior of other road users.

-

Language control is described as the most flexible tool: text prompts can specify time of day, weather, or generate entirely synthetic scenes.

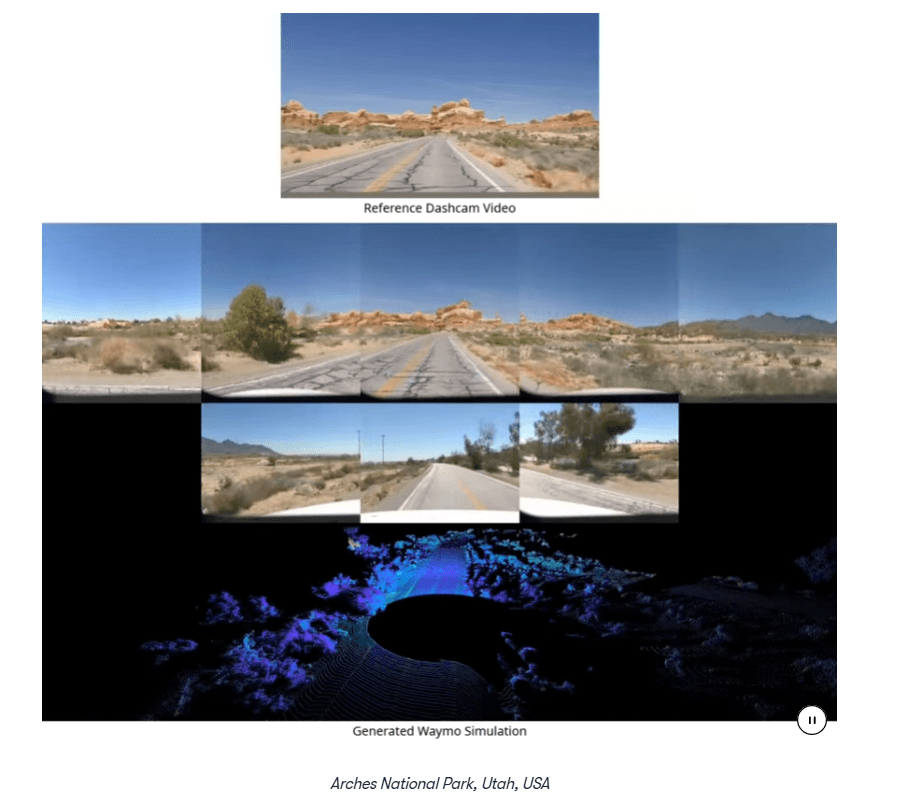

Another capability: the model can convert ordinary videos—such as dashcam or smartphone footage—into multimodal simulations showing how the Waymo Driver would perceive the scene through its sensors.

From a simple dashcam video recorded at Arches National Park in Utah, the Waymo World Model can produce a full multimodal simulation with multi-camera views and a 3D LiDAR point cloud.

Longer simulations—such as negotiating right of way in a narrow alley—are more computationally intensive and harder to keep stable. To address this, Waymo developed a more efficient variant of the model that can generate longer scenes with, according to the company, “dramatically reduced compute” while maintaining high quality—making large-scale simulation runs feasible.

ES

ES  EN

EN